User journey

In Pre-Crime you essentially play two personae: you and DigitalYou. The prologue of the simulation places you directly into the system. By interrogating you, the system adds you to its dataset and inducts you into its memory.

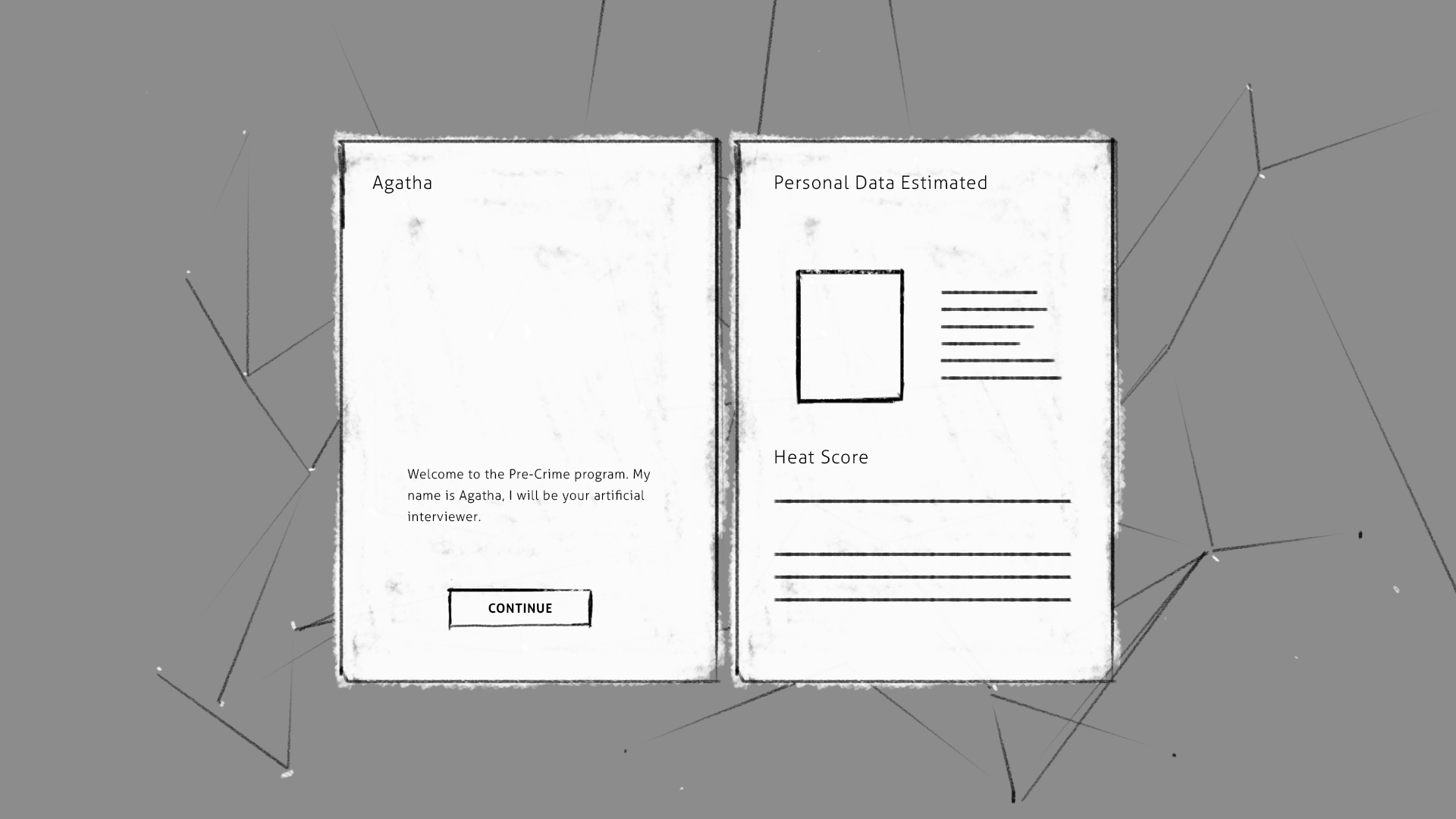

The aim of this personalization is to let you see yourself through the eyes of the machine, which becomes your digital mirror. The system asks you for data through a conversation with an AI we call Agatha.

Your DigitalYou will then be located in our simulated city using open-source maps, open data and geolocation. It receives its own score from a facial recognition program and from the answers you enter.

As a result of this interrogation, your DigitalYou is assigned a Risk Assessment Score, which places you on a scale of how likely you are to be involved in a crime in the near future, as a victim or as a perpetrator. Once you have your score and your place within the city, the system calculates the probability of DigitalYou being involved in a crime in the next couple of weeks. Will this prediction come true?

Your DigitalYou will also lead its own ‘life’ on the grid. Driven by its score, its pre-crime predictions and the machine's self-learning process, it will change its own character according to the algorithm. The system makes decisions you can't control: the algorithm draws its own conclusions, fills in the blanks and reports back to you. You lose control over your own persona, which takes on a life of its own.

As you are not the only one playing the game, your digital persona will end up on the grids of other users (and you will see their personas on your grid). Once you end up on their lists and grids, the system will notify you that you are a suspect. This happens through real-life gaming channels such as instant messages, emails and phone calls. The system essentially becomes a collective conscience: every member of the audience is actually policing your ‘DigitalYou’.

Once the score is set and the prediction is calculated, the DigitalYou is released into the anonymity of the database, to resurface now and again throughout the simulation, as the ScientistYou rolls up their sleeves and gets to serious police work.

As ScientistYou, your aim is to develop a pre-crime system for a kind of Gotham or Babylon built from the cities of everyone who logs into the simulation: a hybrid collage from all over the world, where every player will recognize their own street and neighbourhood, but around the corner there might be a completely different part of another city.

In the course of your investigative duties, you will zoom out from one part of the city and see other citizens and their scores in other parts. You can tap into profiles and watch videos of people telling their own stories of predictive policing, either as perpetrators or as victims.

For every task you have a limited amount of time to react. Depending on the crime, you choose the data sets for your algorithm, then the algorithm itself, and then select ‘generate prediction’.

The prediction is then sent to the police, who start patrolling; there is no way to undo your choices.

After completing each task, you will get a summary of your algorithm's success or misjudgment.

After the summary come the consequences: rewards or punishments that also affect your DigitalYou. So if you make bad predictions as the scientist, your DigitalYou avatar could end up paying for it (for instance, it can get arrested, and its crime score will then increase in the next round). To win the game, you have to keep innocent people (including yourself) out of prison while stopping crimes that would otherwise have happened.

As you approach your next task, try to avoid making the same mistakes. You start with easy crime-solving tasks, but the longer you play, the more complicated they become, as in a serious game.

The experience can last anywhere from ten minutes to three hours: every kind of user will have their own experience of predictive policing, either by fast-forwarding to the results or by diving deep into the tasks. What happens when you tweak the parameters differently next time? The longer you spend on the website, the more strategic the gaming experience becomes: what universe am I creating with my predictive policy in the end? Your past choices will be remembered and can have long-term consequences within your simulated world. Can you safely let the code out into the world, or can it become a dangerous weapon when left to its own devices? And, all in all, are we able to develop 100% objective pre-crime software when we know that our data always carries the risk of bias?